How Latimer.ai is Rewriting AI’s Score

John Pasmore

We continue our series on AI and leadership with serial entrepreneur John Pasmore, co-founder of Oneworld, a late '90s youth culture and hip-hop publication that focused on social and political issues from a multicultural perspective. He also served as co-founder and CEO of the venture-backed, video-based travel platform VoyageTV, which was sold in 2013.

His current venture is Latimer.ai, named after the legendary Black American inventor Lewis Latimer.

Greg Thomas: John, tell us why you started Latimer.ai several years ago.

John Pasmore: I started it for a couple of reasons. Number one, I thought that we could successfully build an AI company. Number two, looking at the landscape when OpenAI first launched generative AI [in November 2022], it was pretty clear that the product did not represent Black and Brown people fairly or accurately. And I have a teenage son. I just thought that if we embed bias in this technology, that’s way worse than everything that we’ve already seen in society. So, I thought we could have a positive impact. We jumped in, and we started Latimer. We launched in January 2024.

Greg: What’s Latimer.ai’s business model? I know you’ve got a background in investing and have sold a company. Did you have any angel capital, venture capital, Series A, any of that? So funding is the first question, and then the business model.

John: I thought, based on my experience, that I would have access to funds. That turned out to be true. We’ve raised money through high-net-worth individuals and small institutions. The business model is subscription. OpenAI released a model that made a lot of sense. We’re priced in a very similar way, at $20 per month for individuals. Yet, we also have a very different offering. If you go to Latimer.ai, you have about 10 models to choose from. And then we have a subscription model for institutions, for schools, and organizations.

Greg: Tell us more about that. From the homepage of Latimer.ai, education and healthcare seem to be two verticals that you’re particularly focused on. Tell us about your work in those areas and any partnerships you have.

John: I’m not an EdTech guy, and we didn’t necessarily launch Latimer as an educational vehicle. I look at these large language models as almost like a Swiss Army knife, in that they can do a lot of different things for a lot of different people. But once we launched, we had so much inbound interest from higher ed that we decided to pursue it. We got a warm welcome from historically Black colleges and universities [HBCUs]. Miles College in Alabama actually subscribed three months prior to our January launch. They very quickly changed how they taught many classes to incorporate AI into the learning process. Instead of just assigning a composition or an essay, they asked students to bring their queries to class so they could discuss them, create a draft, and then submit a final product.

So they were ahead of the curve, and that early validation created interest from other schools. We’ve been able to get trials or contracts from schools including Arizona State University, Southern New Hampshire University, Morehouse, Tennessee State, Johnson C. Smith, Bennett, and many others. We’re off to a good start for a young company.

Greg: That’s excellent. What about healthcare?

John: Early on, we were working with a healthcare company called Syncare. They’re buying policies from UnitedHealthcare and others to grow their business. Some of those risky policies generally have a lot of Black and Brown people in them. They wanted a way to create call center scripts that were empathetic to those customers, and they felt that Latimer could pull data about who the call was to and, on the fly, write a call center script tailored to that person. So that’s one of the things that we’ve done in healthcare.

Recently, we’ve been talking to United Way. During the government shutdown, they were overwhelmed by requests from people who normally rely on the government for health care or housing. Again, that’s more in their call center—just dealing with the volume and asking if there is a way that AI can assist with that. And recently, we’ve been asked by Southern New Hampshire University to work with their nursing certification students. They want to make sure that their nursing students are AI-literate or fluent. So we’re working with the university to create a course and then using Latimer as the interface to show how nurses should be aware of how AI shows up in workspaces now.

Greg: That’s great. And interesting, because when you look at AI development, at least post-ChatGPT, and you delve into healthcare in relation to Black and Brown folks, as you say, there are often certain biases baked in. In healthcare, perhaps Latimer could help mitigate the problem. Is that a correct assumption?

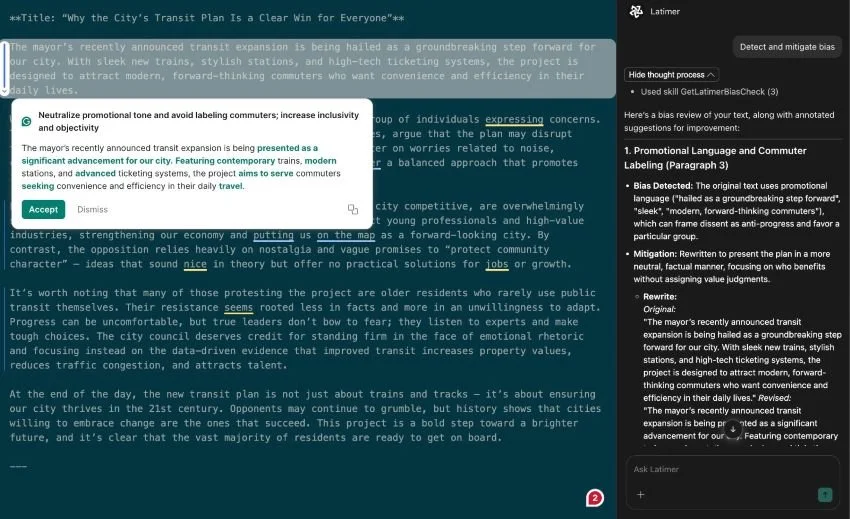

John: It can help. We have a partnership with Grammarly. Grammarly has traditionally been like a spell-check. Now they have a Latimer app in Grammarly that can analyze a document and highlight bias or a lack of inclusivity in written text. Within healthcare, if everybody opts in, you see something called passive listening, where an AI listens to conversations, such as between a nurse and a patient or a doctor and a patient. Then, as an administrator or an organization, you can review those conversations to detect whether your staff is interacting with people in ways that show bias. You can compare different conversations with different groups, and the machine itself, if you’re using pure machine learning, could be pretty objective. So maybe it gives them a more objective view than someone saying, “Hey, I’m talking to everybody the same.” Maybe look at it from a machine learning standpoint to see if the sentiment is really the same, because that’s what people respond to. If your caregiver is kind of cold to you, it adds another layer of stress, and maybe you’re not taking in your instructions properly because you feel tense in the interaction.

Greg: Right. So, hopefully, Latimer.ai can help with the bedside manner.

John: There you go. Yes, that’s it.

Grammarly + Latimer.ai

Greg: Okay. What are your plans moving forward with Latimer, especially in terms of your team’s talent development?

John: We’ve been blessed. This coming January will be year two since we launched, and we’ve been lucky to have essentially the same team and to have added new positions in sales and support. Having the same tech team is super helpful because of their familiarity with the code base and the organization's goals and mission.

We recently added a part-time engineer, a PhD student at Morgan State, and he’s been phenomenal. There’s tremendous talent here in the United States, in HBCUs and HBCU graduates, and other engineers as well. But I’ve been finding—I spend an awful lot of time on college campuses—that there’s a lot of talent that hopefully we can grow and put some of those folks to work.

Greg: I hear you. Now, speaking of college campuses and the use of AI in education, you’ve commented on some of the positive use cases. Of course, educators are also concerned about the use of AI, not just to assist learning and development, but also as a crutch for writing and conducting research. There are concerns about the overuse of AI and about the most appropriate use of AI for students' development at various stages. How would you address some of those concerns?

In part two, John Pasmore and I discuss his plans to develop avatars to engage with students, small vs. large LLMs, world models, and the issue of Artificial General Intelligence (AGI), the holy grail in the fierce competition among frontier models.