Teaching the AI Changes: John Pasmore on Learning and the Race to AGI

In part one of our two-part interview, John Pasmore and I discussed why he launched Latimer.ai, his business model, the organic development of relationships with schools and health care institutions, and his partnership with Grammarly. We continue our conversation in a similar vein, widening our focus to the risks of AI and the uncertainty wrought by AGI (artificial general intelligence).

Part Two

Greg Thomas: Speaking of the use of AI in education, you’ve commented on some of the positive uses. Of course, educators are also concerned about the use of AI, not just to assist learning and development, but also as a crutch for writing and conducting research. There are concerns about the overuse of AI and about the most appropriate use of AI for students’ development at various stages. How would you address some of those concerns?

John Pasmore: As the parent of an 18-year-old, I’m somewhat familiar with the misuse of AI, where, in some cases, students just want to get an assignment done as quickly as possible, and that doesn’t always lead to the learning outcomes you’d like. I returned to Columbia University to pursue a degree in computer science, and the point of a lot of those assignments is that, number one, they’re hard and, number two, they’re time-consuming. It’s the time you spend, say, finding a bug in your program, going through it line by line, that teaches you where to look for bugs and what could go wrong.

Now you can just copy and paste your entire code base into an AI and ask it, “Is there an error in this?” and it’ll tell you where the error is very quickly. The problem is: what are you learning? Some people compare it to a calculator. It’s a little bit different; it’s way smarter than a calculator. So the question is: if it’s always there, why do I have to have that type of learning? We’ll see. This is relatively new technology, so there isn’t any definitive path forward yet.

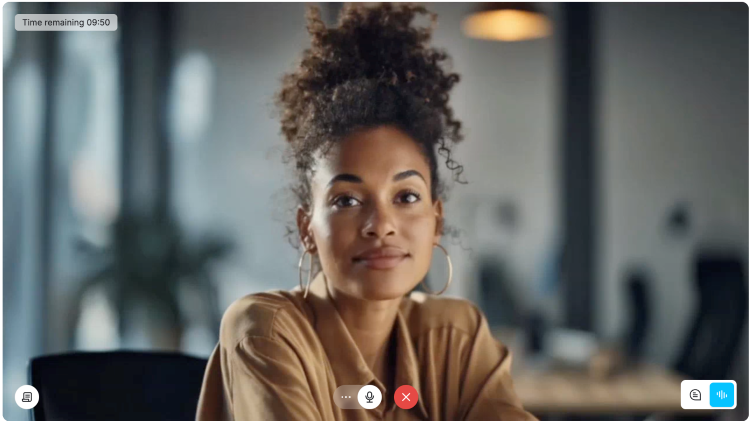

Some schools are taking a more Socratic approach, meaning that if you ask the AI a question, it might ask you one in return. But you can imagine that a young person at home probably has access to a lot of different AIs, so if one is giving them a lot of friction, they’ll just change the channel, so to speak, because they just want the answer. One of the things we’re trying to do, or will be testing shortly, is a fairly engaging, human-like avatar. Maybe, as a user, you’ll feel more as if you’re having a conversation. If she or he doesn’t give you the answer right away but frames the conversation and drills down into what you know or don’t know about the topic, maybe you’ll feel engaged enough to keep talking and do some of the actual learning.

Greg: That is so interesting. So when you talk about an avatar, you’re talking about an actual visual representation as if you’re speaking with it.

John: Yeah. I’ll just show you real quick. She kind of looks human.

Latimer.ai Avatar in development

Greg: Wow, look at that.

John: Yeah, so she’ll talk and interact and have a whole conversation. We can’t deploy her to everybody just yet because she’s computationally expensive, but she’s in alpha. We’re looking to partner with Intel to deploy her; they want people to use their new AI PC, which has expanded capabilities and a more powerful processor. So there are ways we can work with them to put her in front of groups of people at first, and, as with all computing, the cost of deploying something like her is coming down.

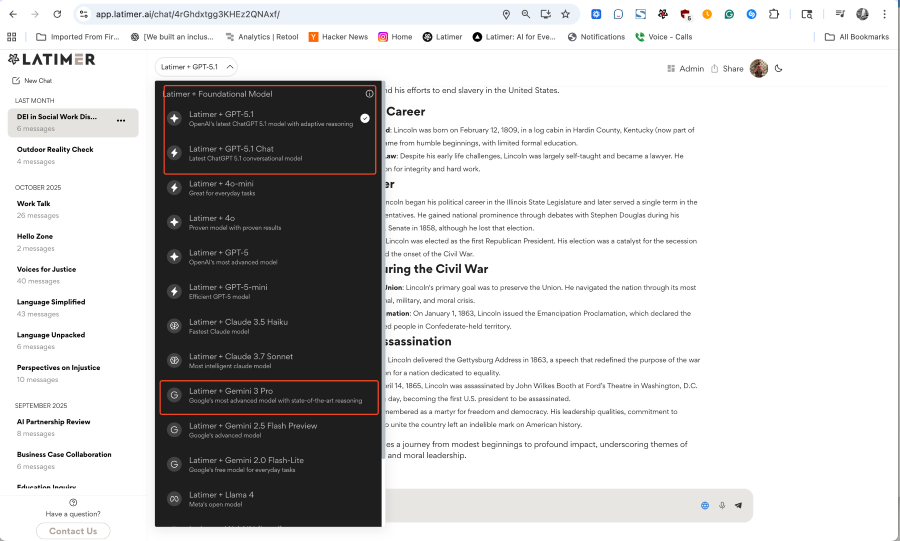

Greg: Right. All the best with that. What about the issue of AGI? I also want to talk about large language models versus small ones personalized to your dataset or a company’s dataset. In terms of the way you see the industry moving, can you comment on that and then relate it to Latimer?

John: As a premium subscriber, you have 10 models in the Latimer dropdown. Two of them are small language models from Intel that download to your computer. You can then shut off the internet or, if you’re working somewhere without internet, still use that model. We’re using that model with Southern New Hampshire University in their robotics class, where a robot may venture somewhere without internet access and still needs to work. In this case, we’re using the model for voice commands: it converts your voice into text and then understands from that text what you’re asking it to do.

I think progress will continue in many different ways at the same time. Small language models are great; if you’re concerned about privacy, they can really enhance it. We would like to further develop a product where, if you don’t want your chat history anywhere other than your hard drive, that’s fine: you can keep it there and be completely private.

In terms of AGI, or artificial general intelligence, there’s a robust conversation online and at a lot of institutions about, number one, whether it’s even possible to create it and, number two, what would happen if it were created. It’s such a mind-bending advance that it’s hard to imagine what technology like that could do. Given the amount of money and brain power being invested (Meta and others are paying some of the best and brightest minds incredible sums to work on this issue), it’s almost like the Manhattan Project of the 1940s.

So even if they don’t reach what everybody considers AGI, we already have very smart AI, and it’s only going to become smarter. And when we talk about large language models, the architecture that term implies may not stay the same; it’s continuing to evolve as well.

Greg: Related to the architecture, I’m wondering about large language models (emphasis on language) and the move toward world models, which add spatial and visual dimensions. Any comments on that in relation to Latimer?

John: We consider ourselves, at least at this stage, a fast follower. We just deployed GPT-5.1 and Google Gemini 3 in our dropdown, for example; we can get those into Latimer fairly quickly, following what I would consider the state of the art in public LLMs. If somebody’s shooting for artificial general intelligence, the idea is that the machine understands the world as we see it, or the physical world in general, and increases both its overall intelligence and its ability to think independently. The idea with AGI is that this thing can think independently of humans, so it should be able to look at the world and learn about it on its own. A child is curious, plays with objects, learns about gravity (if I throw this, here’s what happens), and comes to understand a lot.

Yann LeCun, Meta’s former chief AI scientist, talks about how much information even a child ingests through their eyes. It’s a phenomenal amount of data. Because models don’t have access to the physical world, it’s hard for them to understand everything; they’re just kind of guessing at words rather than truly understanding the world. That work is all underway, and what we see is what’s in these public models. You can imagine that for Palantir and others working for the NSA or the Defense Department, there’s a whole other level of funding and effort to make AI smarter that we don’t really see.

Greg: Well, John, I definitely appreciate your time, as do the readers of our newsletter. So thanks, and happy holidays.

John: Alright, you too, man.

2025 Outchorus

This is our last post for 2025. We’re going to reflect and replenish our reserves, and we hope you’ll take time to do the same. Considering the epochal impact of AI alone, with intelligence increasing by leaps and bounds and machines taking over capabilities we recently thought were exclusively human, 2026 promises to be a year of exciting yet frightening change. We rededicate ourselves to providing insights for leaders through the creative lens of jazz, a cultural technology for managing and leveraging complexity like none other. We appreciate you continuing to adventure with us, and we hope you’ll invite those you know who might benefit from such insights to take this journey, too.